Greetings investigators! In this blog post, we will explore some of the information that a person can deduce by exploring a website. Specifically, we will go over some of the things that could help you determine whether two sites are sisters – that is to say, whether they are owned by the same entity.

If you are reading this before LinkScope Client version 1.2.0 is available, and you wish to follow along, you may need to clone the LinkScope repository in order to get the latest version of the software. We will be using some Resolutions that were created after version 1.1.1 was released. If you happen to be new to LinkScope, there is an introductory post in our blog that goes over the basics.

Our scope for today will cover a relatively well-known misinformation website called ‘Natural News’. How well-known exactly? Well, Wikipedia includes it in their list of fake news websites, and it even has its own Wikipedia entry. The Wikipedia pages themselves contain some background information about the site and its owner. To summarize the parts relevant to our investigation, the site was banned from Facebook after it was discovered that it was using content farms to boost its popularity. The owner tried to circumvent the ban by creating a lot of new websites, one for each topic that the Natural News website covers.

Our objective is to have a look at a few of the sister sites of Natural News, and see what indicators we can uncover that reveal that they are operated by the same entity. In this case, we already know what the verdict will be, but this is useful practice for cases where the relation between different sites in a disinformation network is not so obvious. As of the date of writing of this blog post, the sites covered are operational, and all the indicators that the article goes over can still be observed.

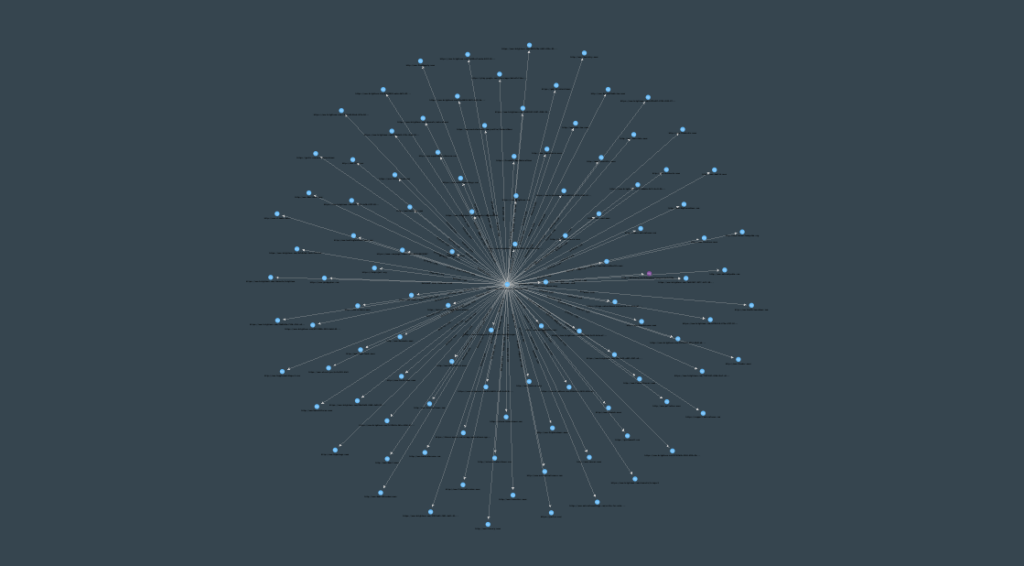

Of course, we could simply visit Natural News and scrape the URLs of all the sites it prompts readers to visit. For the sake of this example however, we are not going to do that. Just as well, because there’s a lot to go through:

Before we begin, we should note that there are a few ways to reveal that some sites are owned by the same person. The most conclusive and straightforward way of doing so is checking the tracking codes that each site uses. Tracking codes are short strings of characters that are sent to analytics services when a user visits a site. The request made by the user's browser contains information that allows site owners to see how many users visit their website, what pages they visit, and so on.

Most commonly used are Google tracking codes, which come in several formats. Google Analytics IDs, for example, begin with “UA-” followed by a string of numbers, while AdSense IDs begin with “ca-pub-” and, you guessed it, are also followed by a string of numbers. Some less common Google tracking codes include Google Tag Manager IDs, which begin with “GTM-”, and Google Measurement IDs, which begin with “G-”; both are followed by a set of alphanumeric characters.
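As a rough illustration of these formats, the sketch below searches a piece of text for the four prefixes described above. The exact lengths in each pattern are our own assumptions, chosen to be permissive, and `find_tracking_codes` is a hypothetical helper name, not part of LinkScope. Expect occasional false positives with patterns this loose.

```python
import re

# Patterns built from the prefixes described in the text.
# The length bounds are assumptions, not official specifications.
TRACKING_PATTERNS = {
    "google_analytics": re.compile(r"\bUA-\d{4,10}(?:-\d{1,4})?\b"),
    "adsense": re.compile(r"\bca-pub-\d{10,20}\b"),
    "tag_manager": re.compile(r"\bGTM-[A-Z0-9]{4,10}\b"),
    "measurement_id": re.compile(r"\bG-[A-Z0-9]{6,12}\b"),
}


def find_tracking_codes(text):
    """Return a dict mapping each code type to its sorted unique matches."""
    found = {}
    for name, pattern in TRACKING_PATTERNS.items():
        matches = sorted(set(pattern.findall(text)))
        if matches:
            found[name] = matches
    return found
```

Running this over a page's raw source is exactly the kind of naive text search that can miss dynamically constructed codes, so treat an empty result as inconclusive.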

As an aside, Google has announced that Universal Analytics (the “UA-” IDs) will be discontinued in 2023, and Google Analytics 4 (which uses Measurement IDs) will take its place.

One last thing before we dive into this: If you want to follow along, you need to install the Extra Module Pack to obtain some of the resolutions we’ll be using. To do this, on the top bar, navigate to Modules -> View Module Manager. In the Module Manager, scroll down until you see the Extra Module Pack, and select it. You can double-click to check what modules it includes. Click the “Install Module Pack” button to install it. Once done, you’re ready to go.

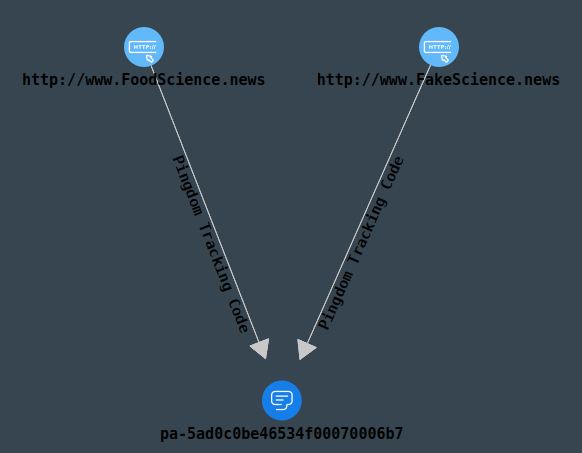

Alright, let’s start the investigation! The specific sites we want to have a look at are the “FoodScience” and “FakeScience” sites, at “http://www.FoodScience.news” and “http://www.FakeScience.news” respectively. Let’s start by creating two Website entities to represent these sites:

Checking for tracking codes manually can be a pain – they could be in the source code of the page you visited, or hidden away in some of the content that was loaded alongside it. The code could even have been dynamically constructed by a JavaScript script, so you wouldn’t find it if you ran a naive text search of the page’s source code.

The best way to discover the tracking codes is to visit the page and observe what web requests the browser makes. The tracking codes will be included in those requests – your browser will contact Google’s servers (or the servers of the relevant tracking code provider), and the tracking code belonging to the website will be visible in the request. This method offers the highest degree of success when it comes to discovering a website’s tracking codes. It is not as fast as crawling source code with crawlers that cannot run JavaScript, but it is far less likely to produce false negatives; that is, you will be able to see the tracking codes that the page actually sends to its analytics providers, even if they are not spelled out in the source code.
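To make the idea concrete, here is a minimal sketch that pulls tracking IDs out of a list of request URLs. It assumes you have already captured those URLs yourself, for instance from your browser's network tab. The parameter names (`tid`, `id`, `client`) reflect the request shapes we commonly see for Google services and are an assumption, not an exhaustive list. This is not how the LinkScope resolution is implemented, and `codes_from_requests` is a hypothetical name.

```python
from urllib.parse import parse_qs, urlsplit

# Query parameters that commonly carry a Google tracking ID (assumed, not exhaustive).
ID_PARAMS = ("tid", "id", "client")
# Prefixes of the ID formats discussed in this post.
ID_PREFIXES = ("UA-", "G-", "GTM-", "ca-pub-")


def codes_from_requests(request_urls):
    """Collect tracking IDs found in the query strings of captured request URLs."""
    codes = set()
    for url in request_urls:
        query = parse_qs(urlsplit(url).query)
        for param in ID_PARAMS:
            for value in query.get(param, []):
                # Only keep values that look like one of the known ID formats.
                if value.startswith(ID_PREFIXES):
                    codes.add(value)
    return codes
```

Providers that embed the ID in the URL path rather than in the query string would need their own handling, which this sketch leaves out.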

LinkScope has a resolution that does just that. Under the “Website Tracking” category, double-click the “Extract Tracking Codes” resolution after selecting both website entities. After a brief wait, we discover that both sites share the Pingdom tracking ID “pa-5ad0c0be46534f00070006b7”:

Since each tracking ID belongs to one account with an analytics provider, we can state with a high degree of confidence that both these sites are managed by the same entity. There are several websites that offer a tracking ID search engine, such as SpyOnWeb and AnalyzeID, which can be used to search their databases for any websites that have that specific tracking ID. Do make sure to verify any results you get – sites can update their tracking IDs and even the analytics platforms they use at any point, so some results could be outdated.
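If you collect tracking IDs for more than a handful of sites, spotting the overlaps by eye gets tedious. The sketch below groups sites by the IDs they share. The function name and input shape are our own illustration; the Pingdom ID is the one found above, while the third site and its ID are made-up placeholders.

```python
from collections import defaultdict


def shared_tracking_ids(site_codes):
    """Map each tracking ID to the sites using it, keeping IDs seen on 2+ sites."""
    sites_by_id = defaultdict(set)
    for site, codes in site_codes.items():
        for code in codes:
            sites_by_id[code].add(site)
    return {
        code: sorted(sites)
        for code, sites in sites_by_id.items()
        if len(sites) > 1
    }


observed = {
    "foodscience.news": {"pa-5ad0c0be46534f00070006b7"},
    "fakescience.news": {"pa-5ad0c0be46534f00070006b7"},
    "unrelated.example": {"UA-00000000-1"},  # made-up placeholder site and ID
}
print(shared_tracking_ids(observed))
```

Only IDs that appear on two or more sites are returned, so the placeholder site drops out of the result.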

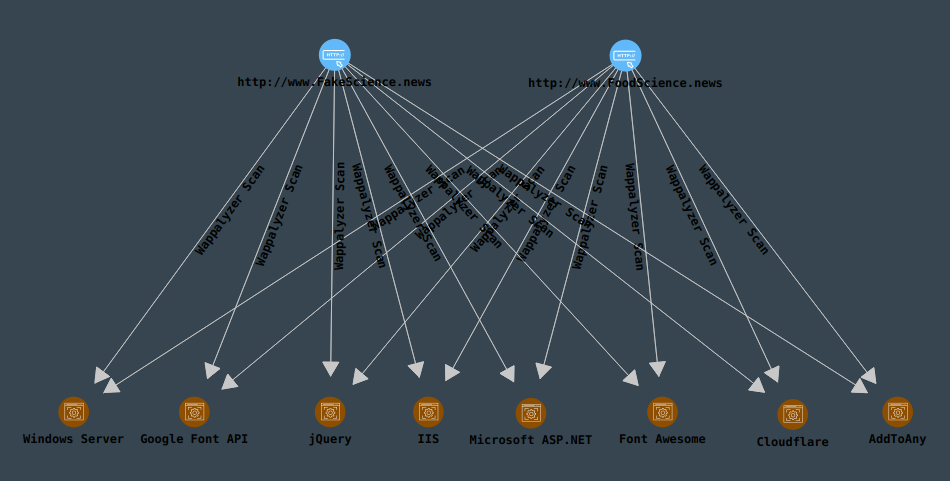

We can take this a step further. Another way to identify whether two websites are owned by the same entity is by inspecting the technologies that they use; websites belonging to the same entity are likely to use the same technologies, as maintaining different technology stacks can be expensive. Inspecting the technologies used by a website can be done in a number of ways. One such way involves using a utility like ‘Wappalyzer’. Wappalyzer scans the source code of websites to see what technologies are referenced. Overall, this is a weaker indicator than tracking codes, and does not signify sister sites by itself. However, the circumstantial evidence that two sites use exactly the same technologies could be significant in an investigation.
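One simple way to put a number on "uses the same technologies" is the Jaccard similarity of the two technology lists: the size of the overlap divided by the size of the combined set. This is our own suggestion for illustration, not something Wappalyzer or LinkScope computes, and the technology names you feed it would come from whatever tool you used.

```python
def stack_similarity(stack_a, stack_b):
    """Jaccard similarity of two technology lists, from 0.0 to 1.0."""
    a, b = set(stack_a), set(stack_b)
    if not a and not b:
        # No technologies detected on either site: nothing to compare.
        return 0.0
    return len(a & b) / len(a | b)
```

A score of 1.0 means the detected stacks are identical. As noted above, that alone does not prove common ownership, since popular stacks are shared by many unrelated sites.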

To run this check in LinkScope, we create a new canvas (shortcut: Ctrl-N), select the “FoodScience” and “FakeScience” website entities, right-click and select “Send Selected Entities to Other Canvas” in the menu that pops up. We send these entities to the new canvas we created, so that the results of each of our operations are displayed in different canvases. This keeps our investigation neat and tidy.

In the new canvas, we select the website entities, and under the “Website Information” resolution category, we double-click to run the resolution “Wappalyzer Website Analysis”. Several different technologies are identified, all of which are shared between both sites:

At this point, it is pretty obvious that both these sites are managed by the same entity. Visiting the sites themselves supports this conclusion: the sites look almost identical.

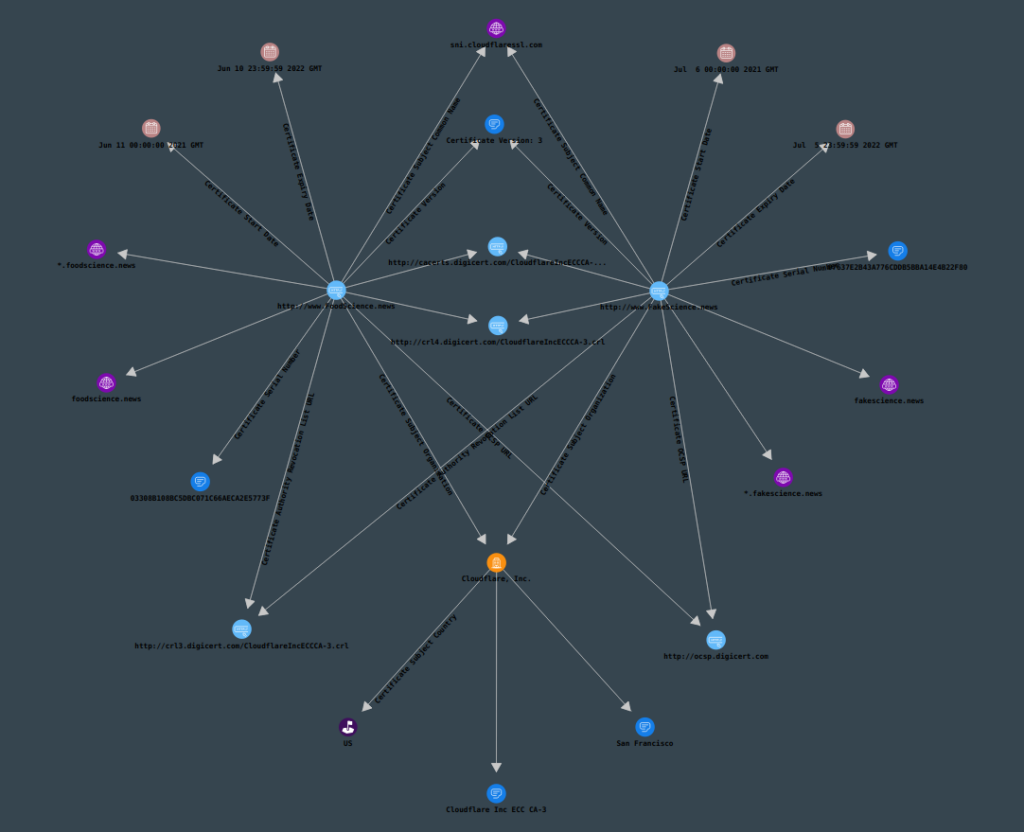

As a bonus, there is one easy, albeit inconsistent, way to get information about a particular site: Inspecting the site’s certificate. Sometimes, this contains information about the organization that the site belongs to. We could check both sites’ certificates with the resolution “Analyze Website Certificate”, under the “Network Infrastructure” resolution category.

In this case, we don’t gain much information; only that both sites use certificates issued by Cloudflare. Inspecting a domain’s certificate can sometimes give you a lot of very useful information, so it’s always a good idea to do so whenever you are able to.
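For readers who want to check a certificate outside of LinkScope, here is a minimal sketch using Python's standard `ssl` module. The helper names are our own, and this is not how the “Analyze Website Certificate” resolution works internally. `fetch_certificate` needs network access; `summarize_certificate` works on the dictionary that `getpeercert()` returns.

```python
import socket
import ssl


def flatten_name(rdns):
    """Flatten the nested tuples used by getpeercert() into a plain dict."""
    return {key: value for rdn in rdns for key, value in rdn}


def summarize_certificate(cert):
    """Pick out the fields most useful for attributing a site to an owner."""
    subject = flatten_name(cert.get("subject", ()))
    issuer = flatten_name(cert.get("issuer", ()))
    return {
        "common_name": subject.get("commonName"),
        "organization": subject.get("organizationName"),  # often absent
        "issuer_organization": issuer.get("organizationName"),
    }


def fetch_certificate(hostname, port=443, timeout=10):
    """Connect over TLS and return the server's validated certificate as a dict."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()
```

Calling `summarize_certificate(fetch_certificate("example.com"))` would show whether the subject carries an organization name at all. Many certificates only validate the domain, in which case that field comes back as `None`.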

That wraps up this blog post on tracking website owners. We hope that you have found this educational. If you enjoyed reading this, make sure to keep an eye on our blog, as we will be posting more news, tips and tutorials on a variety of topics!

Best Wishes,

AccentuSoft